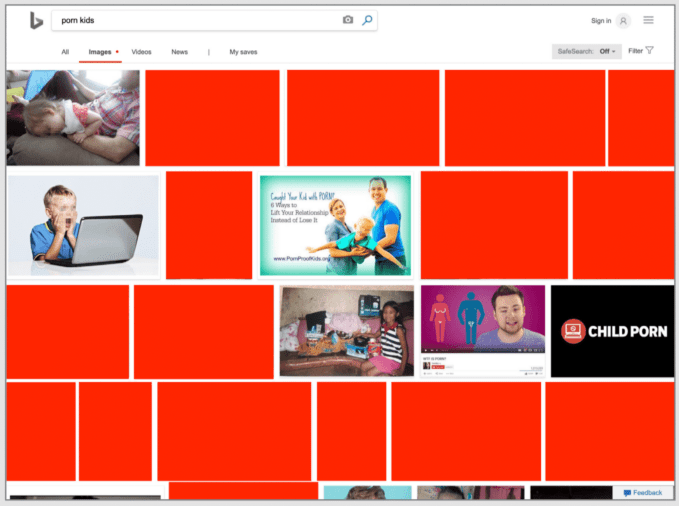

Illegal child exploitation imagery is easy to find on Microsoft’s Bing search engine. But even more alarming is that Bing will suggest related keywords and images that provide pedophiles with more child pornography. Following an anonymous tip, TechCrunch commissioned a report from online safety startup AntiToxin to investigate. The results were disturbing.

Bing searches can return illegal child abuse imagery

[WARNING: Do not search for the terms discussed in this article on Bing or elsewhere as you could be committing a crime. AntiToxin is closely supervised by legal counsel and works in conjunction with Israeli authorities to perform this research and properly hand its findings to law enforcement. No illegal imagery is contained in this article, and it has been redacted with red boxes here and inside AntiToxin’s report.]

The research found that terms like “porn kids,” “porn CP” (a known abbreviation for “child pornography”) and “nude family kids” all surfaced illegal child exploitation imagery. And even people not seeking this kind of disgusting imagery could be led to it by Bing.

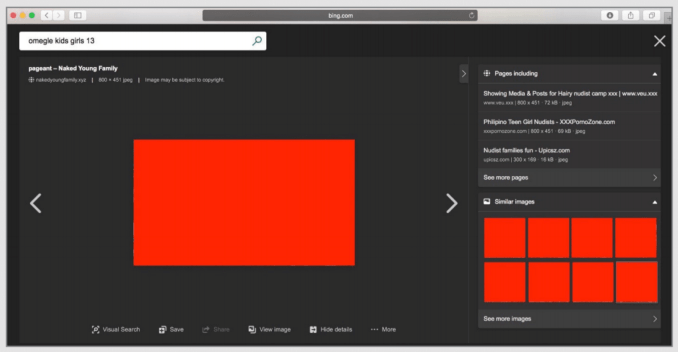

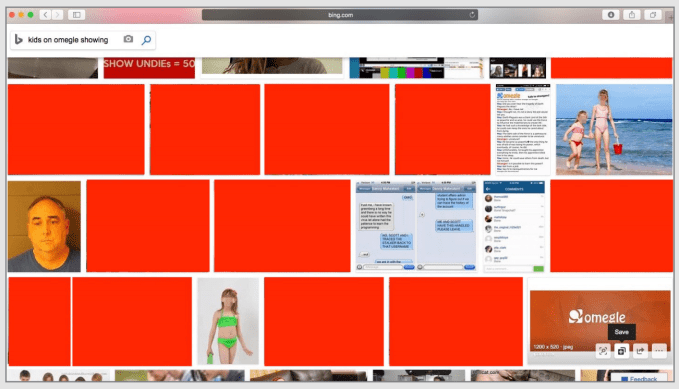

When researchers searched for “Omegle Kids,” referring to a video chat app popular with teens, Bing’s auto-complete suggestions included “Omegle Kids Girls 13,” which revealed extensive child pornography when searched. And if a user clicked on those images, Bing showed them more illegal child abuse imagery in its Similar Images feature. Another search for “Omegle for 12 years old” prompted Bing to suggest searching for “Kids On Omegle Showing,” which pulled in more criminal content.

Bing’s Similar Images feature can suggest additional illegal child abuse imagery

The evidence shows a massive failure on Microsoft’s part to adequately police its Bing search engine and to prevent its suggested searches and images from assisting pedophiles. Similar searches on Google did not produce imagery as clearly illegal, or as much concerning content, as Bing did. Internet companies like Microsoft must invest more in combating this kind of abuse through both scalable technology solutions and human moderators. There’s no excuse for a company like Microsoft, which earned $8.8 billion in profit last quarter, to be underfunding safety measures.

TechCrunch received an anonymous tip about the disturbing problem on Bing after my reports last month on WhatsApp group chats used to trade child exploitation imagery, the third-party Google Play apps that made these groups easy to find, and how these apps ran Google’s and Facebook’s ad networks to make money for themselves and the platforms. In the wake of those reports, WhatsApp banned more of these groups and their members, Google removed the WhatsApp group discovery apps from Google Play, and both Google and Facebook blocked the apps from running their ads, with the latter agreeing to refund advertisers.

Unsafe search

Following up on the anonymous tip, TechCrunch commissioned AntiToxin to investigate the Bing problem. AntiToxin conducted its research from December 30th, 2018 to January 7th, 2019 with proper legal oversight. Searches were conducted on the desktop version of Bing with “Safe Search” turned off. AntiToxin was founded last year to build technologies that protect networks against bullying, predators and other forms of abuse. [Disclosure: The company also employs Roi Carthy, who contributed to TechCrunch from 2007 to 2012.]

AntiToxin CEO Zohar Levkovitz tells me, “Speaking as a parent, we should expect responsible technology companies to double, and even triple-down to ensure they are not adding toxicity to an already perilous online environment for children. And as the CEO of AntiToxin Technologies, I want to make it clear that we will be on the beck and call to help any company that makes this its priority.” AntiToxin’s full report was published for the first time alongside this article.

TechCrunch provided a full list of troublesome search queries to Microsoft along with questions about how this happened. Microsoft’s chief vice president of Bing & AI Products Jordi Ribas provided this statement: “Clearly these results were unacceptable under our standards and policies and we appreciate TechCrunch making us aware. We acted immediately to remove them, but we also want to prevent any other similar violations in the future. We’re focused on learning from this so we can make any other improvements needed.”

A search query suggested by Bing surfaces illegal child abuse imagery

Microsoft claims it assigned an engineering team that fixed the issues we disclosed, and that it’s now working on blocking any similar queries as well as problematic related search suggestions and similar images. However, AntiToxin found that while some search terms from its report are now properly banned or cleaned up, others still surface illegal content.

The company tells me it’s changing its Bing flagging options to include a broader set of categories users can report, including “child sexual abuse.” When asked how the failure could have occurred, a Microsoft spokesperson told us that “We index everything, as does Google, and we do the best job we can of screening it. We use a combination of PhotoDNA and human moderation but that doesn’t get us to perfect every time. We’re committed to getting better all the time.”

Microsoft’s spokesperson refused to disclose how many human moderators work on Bing or whether it planned to increase its staff to shore up its defenses. But they then tried to object to that line of reasoning, saying, “I sort of get the sense that you’re saying we totally screwed up here and we’ve always been bad, and that’s clearly not the case in the historic context.” The truth is that Microsoft did totally screw up here, and the fact that it pioneered PhotoDNA, an illegal-imagery detection technology now used by other tech companies, doesn’t change that.

The Bing child pornography problem is another example of tech companies refusing to adequately reinvest the profits they earn into ensuring the security of their own customers and society at large. The public should no longer accept these shortcomings as repercussions of tech giants irresponsibly prioritizing growth and efficiency. Technology solutions are proving insufficient safeguards, and more human sentries are necessary. These companies must pay now to protect us from the dangers they’ve unleashed, or the world will be stuck paying with its safety.

Source: TechCrunch