The Amazon Echo and Google Home are amazing devices, and each has advantages over the other. In my home, we use Amazon Echo devices and have them around the house and outside. I have the original in the living room, Dots in the bedrooms, my office and outside, a Tap in my woodworking workshop and Spots in the kids’ room (with tape over the camera). They’re great devices but far from perfect. They’re missing several key features, and the Google Home is missing the same things.

I polled the TechCrunch staff. The following are the features we would like to see in the next generation of these devices.

IR Blaster

Right now, it’s possible to have the Echo and Home control a TV, but only through third-party devices. If the Echo or Home had a top-mounted 360-degree IR blaster, the smart speakers could natively control TVs, entertainment systems, and heating and cooling units.

Echos and Homes are naturally placed out in the open, which makes them well suited to controlling devices with an infrared port. Saying “turn on the TV” or “turn on the AC” could trigger the Echo to broadcast the IR codes to the TV or wall-mounted AC unit.

This would require Amazon and Google to integrate a complete universal remote scheme into the Echo and Home. That’s not a small task. Companies such as Logitech’s Harmony, Universal Remote Control and others are dedicated to ensuring their remotes are compatible with everything on the market. It seems like an endless battle of discovering new IR codes, but one I wish Amazon and Google would tackle. I would like to be able to control my electric fireplace and powered window shades with my Echo without any hassle.

A dedicated app for music and the smart home

The current Home and Alexa apps are bloated and unusable for daily use. I suspect that’s by design, as it forces the users to use the speaker for most tasks. The Echo and Home deserve better.

Right now, Amazon and Google seemingly want users to use voice to set up these devices. And that’s fine to a point. If a user is going to use these speakers for listening to Spotify or controlling a set of Hue lights, the current app and voice setup works fine. But if a user wants an Echo to control a handful of smart home devices from different vendors, a dedicated app for the smart home ecosystem should be available — bonus points if there’s a desktop app for even more complex systems.

Look at Sonos. The Sonos One is a fantastic speaker and arguably the best-sounding multi-room speaker system. Even though Alexa is built into the speaker, the Sonos app is still useful, and a similar app would be just as useful for the Echo and Home. A dedicated music app would let Echo and Home users more easily browse music sources, select tracks and control playback on different devices.

The smart speakers can be the center of complex smart home ecosystems and deserve a competent companion app for setup and maintenance.

Logitech’s Harmony app is a good example here as well. This desktop app allows users to set up multiple universal remotes. The same should be available for Echo and Home devices. For example, my kids have their own Spotify accounts and do not need voice access to my Vivint home security system or the Hue bulbs in the living room. I want a way to more easily customize the Echo devices throughout the home. Setting up such a system is currently not possible and would be clunky and tiresome to do through a mobile app unless it’s dedicated to the purpose.

Mesh networking

Devices such as Eero and Netgear’s Orbi line are popular because they easily flood an area with wi-fi that’s faster and more reliable than wi-fi broadcast by a single access point. Mesh networking should be included in the Google Home or Amazon Echo.

These devices are designed to be placed out in the open and in common spaces, which is also the best placement for wi-fi routers. Including a mesh networking extender in these devices would increase their appeal and encourage owners to buy more while also improving the owner’s wi-fi. Everyone wins.

Buying Eero seems like the logical play for Amazon or Google. Eero already makes one of the best mesh networking products on the market. The products are well designed and packaged in small enclosures. Even if Google or Amazon doesn’t build the mesh networking bits directly into the speaker, they could be included in the speaker’s wall power supply, allowing either company to quickly implement mesh networking across its product lines and offer it as a logical add-on purchase.

3.5mm optical output

I have several Dots hooked up to full audio systems thanks to the 3.5mm output. But it’s just two-channel analog, which is fine for NPR but I want more.

For several generations, the MacBook Pro rocked an optical output through the 3.5mm jack. I suspect it wasn’t widely used, which led to Apple dropping it from the latest generation. It would be lovely if the Echo and Home had this option, too.

Right now, the digital connection would not make a large difference in audio quality since the device streams at a relatively low bit-rate. But if either Google or Amazon decides to pursue higher-quality audio like that offered by Tidal, this would be a must-have addition to the hardware.

Outdoor edition

I spend a good amount of time outside in the summer and managed to install an Echo Dot on my deck. The Dot is not meant to be installed outside, and though my setup has survived a year outside, it would be great to have an all-weather Echo that was much more robust and weather resistant.

Here’s how I installed an Echo Dot on my deck. Mount one of these electrical boxes in a location that would keep the Echo Dot out of the rain. Pop out one of the sides of the box and fit the Dot inside the box. The Dot should be exposed and facing down. Run the power cable and 3.5mm cable through the hole in the side, and send the audio to an amp like this to power a set of outside speakers. I used asphalt shingles to cover the topside of both devices to protect them from water dripping off the deck. This setup has so far survived a Michigan summer and winter.

I live outside a city and have always had speakers outside. From its location under the deck, my Dot still manages to pick up my voice, allowing control of Spotify and my smart home while I’m around my yard. It’s a great experience and I wish Amazon or Google made an outdoor version of their smart speakers so more people could take their voice assistants outside.

Improved privacy

There’s an inherent creepiness with having devices always listening throughout your home. The Google Home Mini was even caught recording everything and sending the recordings back to Google. Consumers should have more options in how Amazon and Google handle the recorded data.

There should be an option to allow the user to opt out of sending recordings back to Amazon or Google, even if concessions have to be made. If needed, give the user the option of opting out of several features, or let the user decide if the recordings should be deleted after a few days or weeks.
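To make the idea concrete, here is a minimal sketch of what such a setting could look like. Everything in it is hypothetical: `PrivacySettings`, `upload_recordings`, `retention_days` and `should_delete` are names invented for illustration, not anything Amazon or Google actually exposes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class PrivacySettings:
    """Hypothetical per-user privacy options (not a real Alexa or Google API)."""
    upload_recordings: bool = True        # False = user opted out of sending audio
    retention_days: Optional[int] = None  # None = keep recordings indefinitely


def should_delete(recorded_at: datetime, now: datetime,
                  settings: PrivacySettings) -> bool:
    """Return True if a stored recording should be purged under the settings."""
    if not settings.upload_recordings:
        # The user opted out entirely, so nothing should be kept.
        return True
    if settings.retention_days is None:
        # No retention limit was chosen.
        return False
    return now - recorded_at > timedelta(days=settings.retention_days)
```

With `retention_days=7`, a recording made ten days ago would be purged while one made yesterday would be kept.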

Consumers are soon going to be looking for this sort of control as the topic grows in intensity following Facebook’s blunder, and it would be wise for Google and Amazon to get ahead of consumers’ expectations.

A new portable speaker

I use a Tap in my workshop and it does a fine job. But the cloth covering gets dirty. And I discovered it’s not durable after dropping it once. What’s worse, if the always-listening mode is activated, the speaker must be put back on its dock after 12 hours or the battery completely dies.

The Tap was one of the first Amazon Echo devices. Originally users had to hit a button to activate Alexa, but the company added voice activation after it launched. It’s a handy speaker but it’s due for an upgrade.

A portable Echo or Home needs to be all-weather, durable and easy to clean. It needs to have a dock and a built-in micro-USB port, and it must have voice-activated control, with bonus points if it can lock out unknown voices.

Improved accessibility features

Voice assistant devices are making technology more accessible than ever, but there are still features that should be added. Lots of people with speech impairments can hear perfectly well, but an Amazon Echo or Google Home won’t recognize their speech accurately at all.

Apple added a fix for this to Siri: users can type queries instead of speaking them. The option is available in iOS 11 under the accessibility menu. The Google Home and Amazon Echo should have the same feature.

Users should be able to send text queries to the Echo from their mobile phone (within the Alexa app, via a free-form, chatbot-style text field) and still hear the spoken response and take advantage of all the skills and smart home integration. From a technical point of view, it would be trivial since it wouldn’t need any speech-to-text conversion, and it would increase the appeal of the device to a new market of shoppers.

Motion sensors

There are several cases where an included motion sensor would improve the user experience of a voice assistant.

A morning alarm could increase in intensity if motion isn’t detected, or it could be deactivated by sensing a set amount of motion. Motion detectors could also act as light switches, switching lights on when motion is detected and off when it no longer is. And there’s more: automatically lowering the volume when no motion is detected, serving as additional sensors for alarm systems, and detecting occupants for HVAC systems.
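The alarm and light-switch rules are simple enough to sketch in a few lines. This is only an illustration under assumed numbers: the function names, the 30-second escalation interval, the 10-point volume step and the five-minute light timeout are all made up, and no such API exists on the Echo or Home today.

```python
def alarm_volume(base: int, seconds_ringing: int, motion_detected: bool,
                 step: int = 10, interval: int = 30,
                 max_volume: int = 100) -> int:
    """Volume (0-100) for a morning alarm that escalates until someone moves."""
    if motion_detected:
        # Motion means the sleeper is up, so the alarm goes silent.
        return 0
    # Raise the volume by `step` for every full `interval` seconds of ringing.
    return min(max_volume, base + step * (seconds_ringing // interval))


def lights_on(seconds_since_motion: int, timeout: int = 300) -> bool:
    """Keep lights on until no motion has been seen for `timeout` seconds."""
    return seconds_since_motion < timeout
```

An alarm that starts at 40 would reach 70 after 90 seconds without motion, cap out at 100, and drop to 0 as soon as motion is sensed.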

Source: TechCrunch

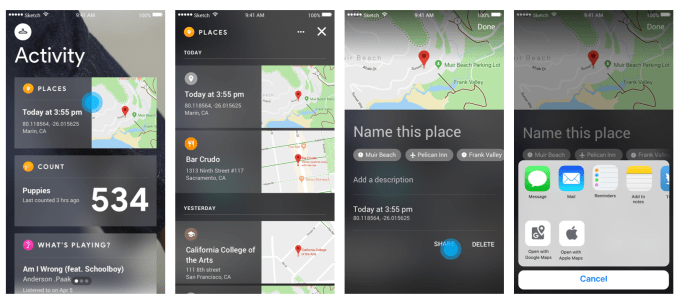

Because the devices send their GPS coordinates directly to each other, the team created a special compression algorithm just for that data — because if you want fine GPS, that’s actually quite a few digits that need to be sent along. But after compression it’s just a couple of bytes, making it possible to send it more frequently and reliably than if you’d just blasted out the original data.
