Spotify’s lack of full lyrics support and its minimal attention to voice are becoming problems for the streaming service. The company has been so focused on developing its personalization technology and programming its playlists that it has overlooked key features its competitors – including Apple, Google, and Amazon – offer today and are now capitalizing on.

For example, in the updated version of Apple Music rolling out this fall with iOS 12, users won’t just have access to lyrics in the app as before, they will also be able to perform searches by lyrics instead of only by the artist, album, or song title.

And Apple Music is actually playing catch up with Amazon on this front.

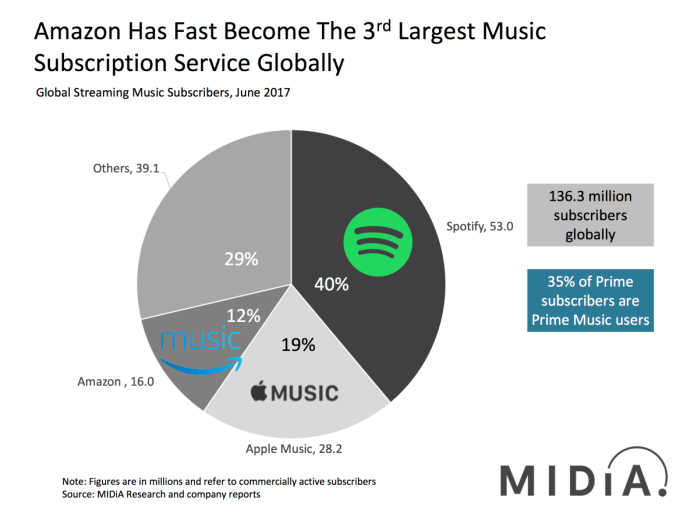

Amazon Music, which has quietly grown to become the third largest music streaming service, allows users to view the lyrics as songs play, and ties that to its Alexa voice platform. Amazon Music users with an Alexa device can also search for songs by lyrics just by saying “play the song that goes…”.

The company has been offering this capability for close to two years. While it had originally been one of Alexa’s hidden gems, today asking Alexa to pull up a song by its lyrics is considered a standard feature.

Though Google has lagged behind Apple, Spotify and Amazon in music, its clever Google Assistant is capable of search-by-lyrics, too. And as an added perk, it can also work like Shazam to identify a song that’s playing nearby.

With the rise of voice-based computing, features like asking for songs with verbal commands or querying databases of lyrics by voice are now expected.

And where’s Spotify on this?

It has launched lyrics search only in Japan so far, and refuses to provide a timeline as to when it will make this a priority in other markets. Even the references to lyrics tests tucked away in the app’s code point only to the non-U.S. markets of Thailand and Vietnam.

Those tests have been underway since the beginning of the year, we understand from sources. But the attention being given to these tests is minimal – Spotify isn’t measuring user engagement with the lyrics feature at this point. And Spotify CEO Daniel Ek wasn’t even aware his team was working on these lyrics tests, we heard, which implies a lack of management focus on this product.

Meanwhile, competitors like Apple and Amazon have dedicated lyrics teams.

We asked Spotify multiple times if it was currently testing lyrics in the U.S. (You can see one person who claims they gained access here, for example.) But the company never responded to our questions.

Image credit: Imgur via Reddit user spalatidium

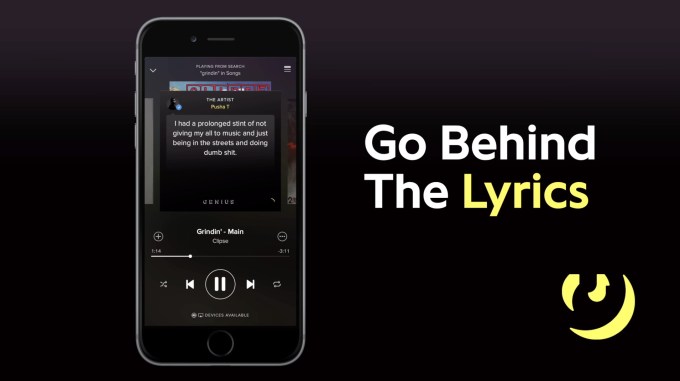

Some Spotify customers who largely listen to popular music may be confused about the lack of a full lyrics product in the app. That’s because Spotify partnered with Genius in 2016 to launch “Behind the Lyrics,” which offers lyrics and music trivia on a portion of its catalog. But you don’t see all the song’s lyrics when the music plays because they’re interrupted with facts and other background information about the song, the lyrics’ meaning, or the artist.

That same year, Spotify also ditched its ties with Musixmatch, which had been providing its lyrics support, as the two companies could no longer come to an agreement. There was expectation from users that lyrics would return at some point – but only “Behind the Lyrics” emerged to fill the void.

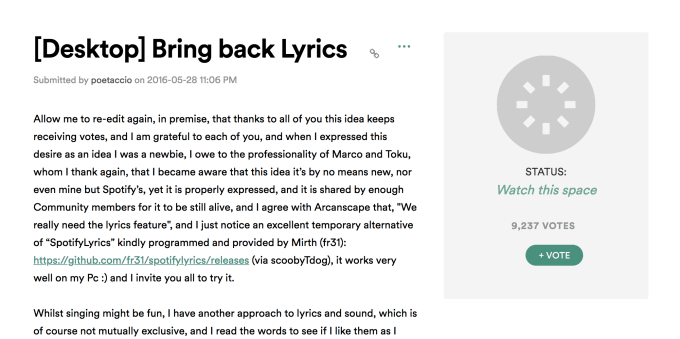

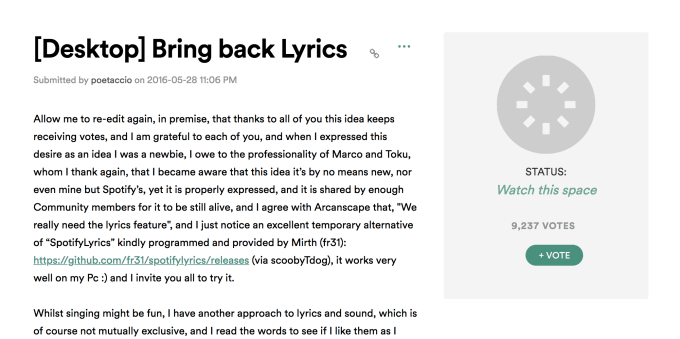

Demand for a real lyrics feature remains strong, though. Users regularly post on social media and Reddit about the topic.

A request for lyrics’ return is also one of the most upvoted product ideas on Spotify’s user feedback forum. It has 9,237 “likes,” making it the second-most popular request.

(The idea has been flagged “Watch this Space,” but it’s been tagged like that for so long it’s no longer a promise of something that’s soon to come.) There is no internal solution in the works, we understand, and Spotify is not working on a new deal with a third party at this time.

The lack of lyrics is becoming a problem in other areas, as well, now that competitors are launching search-by-lyrics features that work via voice commands.

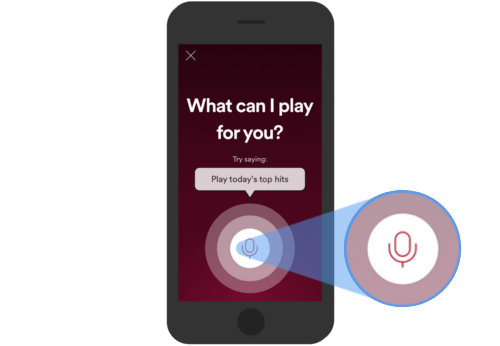

In fact, Spotify was late, in general, to address users’ interest in voice assistance – even though a primary use case for music listening is when you’re on the go – like, in the car, out walking or jogging, at the gym, biking, etc.

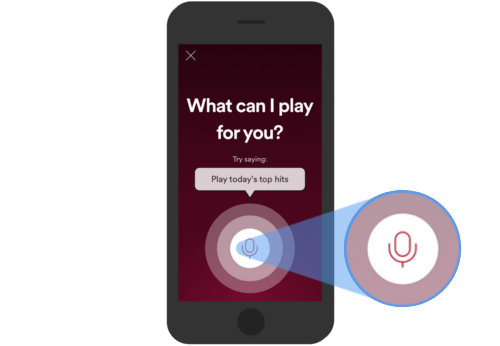

It only began testing a voice search option this spring, accessible through a new in-app button. Now rolled out to mobile users on Spotify Premium, the voice search product works via a long-press on the Search button in the app. You can then ask Spotify to play music, playlists, podcasts, and videos.

But the feature is still wonky. For one thing, hiding it away as a long press-triggered option means many users probably don’t know it exists. (And the floating button that pops up when you switch to search is hard to reach.) Secondly, it doesn’t address the primary reason users want to search by voice: hands-free listening.

Meanwhile, iPhone/HomePod users can tell Siri to play music with a hands-free command; Google Assistant/Google Home users can instruct the helper to play their songs – even if they only know the lyrics. And Amazon Music’s Alexa integration is live on Echo speakers, and available hands-free in its Music app.

Even third-party music services like Pandora are tapping into the voice platforms’ capabilities to provide search by lyrics. For example, Pandora Premium launched this week on Google Assistant devices like the Google Home, and offers search-by-lyrics powered by Google Assistant.

Spotify can’t offer a native search-by-lyrics feature in its app, much less a search-by-lyrics option that works by voice command, because it doesn’t even have fully functional lyrics.

Voice and lyrics aren’t the only challenges Spotify is facing going forward.

Spotify also lacks dedicated hardware like its own Echo or HomePod. Given the rise of voice-based computing and voice assistants, the company has the potential to cede some portion of the market as consumers end up buying into the larger ecosystems provided by the main tech players: Siri/HomePod/Apple Music vs. Google Assistant/Google Home/Google Play Music (or YouTube Music) vs. Alexa/Echo/Amazon Music (all promoted by Prime).

For now, Spotify works with partners to make sure its service performs on their platforms, but Apple isn’t playing nice in return.

Elsewhere, Spotify may play – even by voice – but won’t be as fully functional as the native solutions. With Spotify as the default service on Echo devices, for example, Alexa can’t always figure out commands that instruct it to play music by lyrics, activity, or mood – commands that work well with Amazon Music, of course.

Other cracks in Spotify’s dominance are starting to show, too.

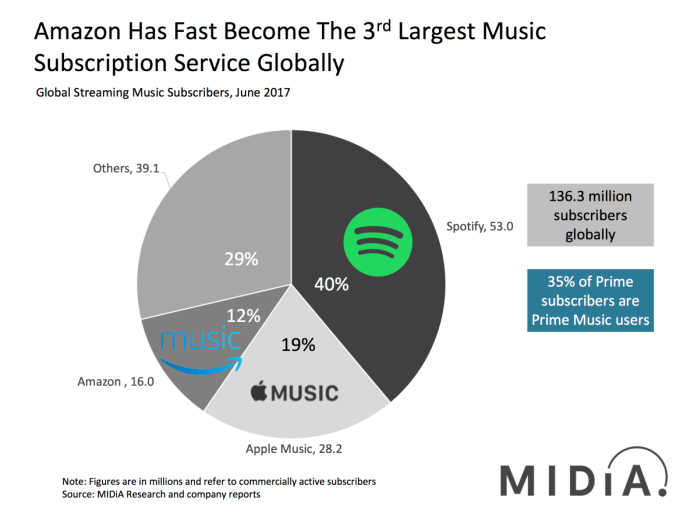

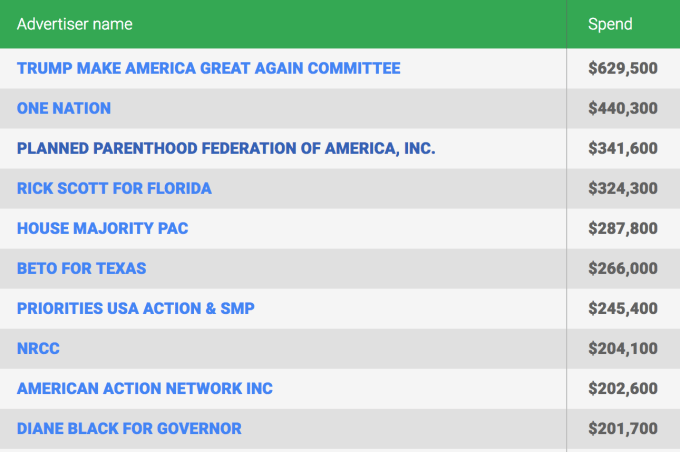

Amazon Music has seen impressive growth, thanks to adoption in four key Prime markets: the U.S., Japan, Germany and the U.K. With 12% of the music streaming market now, it has become the dark horse that’s been largely ignored amid discussions of the Apple vs. Spotify battle. But it’s not necessarily one to count out just yet.

YouTube Music, though brand new, has managed to snag Lyor Cohen as its Global Music Head, while Spotify’s latest headlines are about losing Troy Carter.

Meanwhile, Apple CEO Tim Cook just announced during the last earnings call that Apple Music has moved ahead of Spotify in North America. He also warned against ceding too much control to algorithms, in a recent interview, making a sensible argument for maintaining music’s “spiritual role” in our lives.

“We worry about the humanity being drained out of music, about it becoming a bits-and-bytes kind of world instead of the art and craft,” Cook mused.

Apple was late to music streaming, having been so tied to its download business. But it also had the luxury of time to get it right, knowing that its powerful iPhone platform means anything it launches has a built-in advantage. (And it’s poised to offer TV shows as a part of its subscription, too, which could be a further draw.)

How much time does Spotify have to get it right?

Despite these concerns, Spotify doesn’t need to panic yet – it still has more listeners, more paying customers, and more consumer mindshare in the music streaming business. It has its popular playlists and personalization features. It has its RapCaviar. But it will need to plug its holes to keep up where the market is heading, or risk losing customers to the larger platforms in the months ahead.

Source: TechCrunch

On Vive and Oculus sets I always had the feeling that I was looking through a hole into the VR world — a large hole, to be sure, but having your peripheral vision be essentially blank made it a bit claustrophobic.

The resolution of the custom AMOLED display is supposedly 5K. But the company declined to specify the actual resolution when I asked. They did, however, proudly proclaim full RGB pixels and 16 million sub-pixels. Let’s do the math:
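As a rough sketch of that arithmetic, assume "full RGB" means each pixel is made up of three sub-pixels (one red, one green, one blue). That reading is an assumption, since the company did not confirm it, and the source does not say whether the 16 million figure is per eye or for the whole headset. Under that assumption:

$$
\frac{16{,}000{,}000 \text{ sub-pixels}}{3 \text{ sub-pixels per pixel}} \approx 5{,}333{,}333 \text{ pixels}
$$

That works out to roughly 5.3 million pixels in total, which is an estimate rather than a confirmed specification.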

The other major new inclusion is an eye-tracking system provided by Tobii. We knew eye-tracking in VR was coming; it was demonstrated at CES, and the Fove Kickstarter showed it was at least conceivable to integrate into a headset now-ish.