Rob Blackie is a Digital Strategist based in London, England, who has contributed to The Guardian and The Independent newspapers.


Facebook has had a terrible couple of years. Fake news. Cambridge Analytica. Charges of anti-semitism. Russia hacking the 2016 election. Racist memes, murders and lynchings in India, Myanmar and Sri Lanka.

And Facebook is just the tech company with the longest list of scandals. There are also the well-documented roles of Google, YouTube and Twitter in radicalization to consider, not to mention the growing global health crises caused by medical misinformation spread on all the major platforms.

Investors are rightly beginning to worry. If tech companies and their investors can’t foresee and stop these problems, the likely result is damaging regulation that could cost them billions.

The rest of us are increasingly unhappy that internet giants refuse to take responsibility. The argument that the problem lies with third-party abuse of their tools is wearing thin, not just with the media and politicians, but increasingly with the public as well.

If the tech giants don’t want regulators to step in and police them, they need to do much more to predict and stop abuse before it happens.

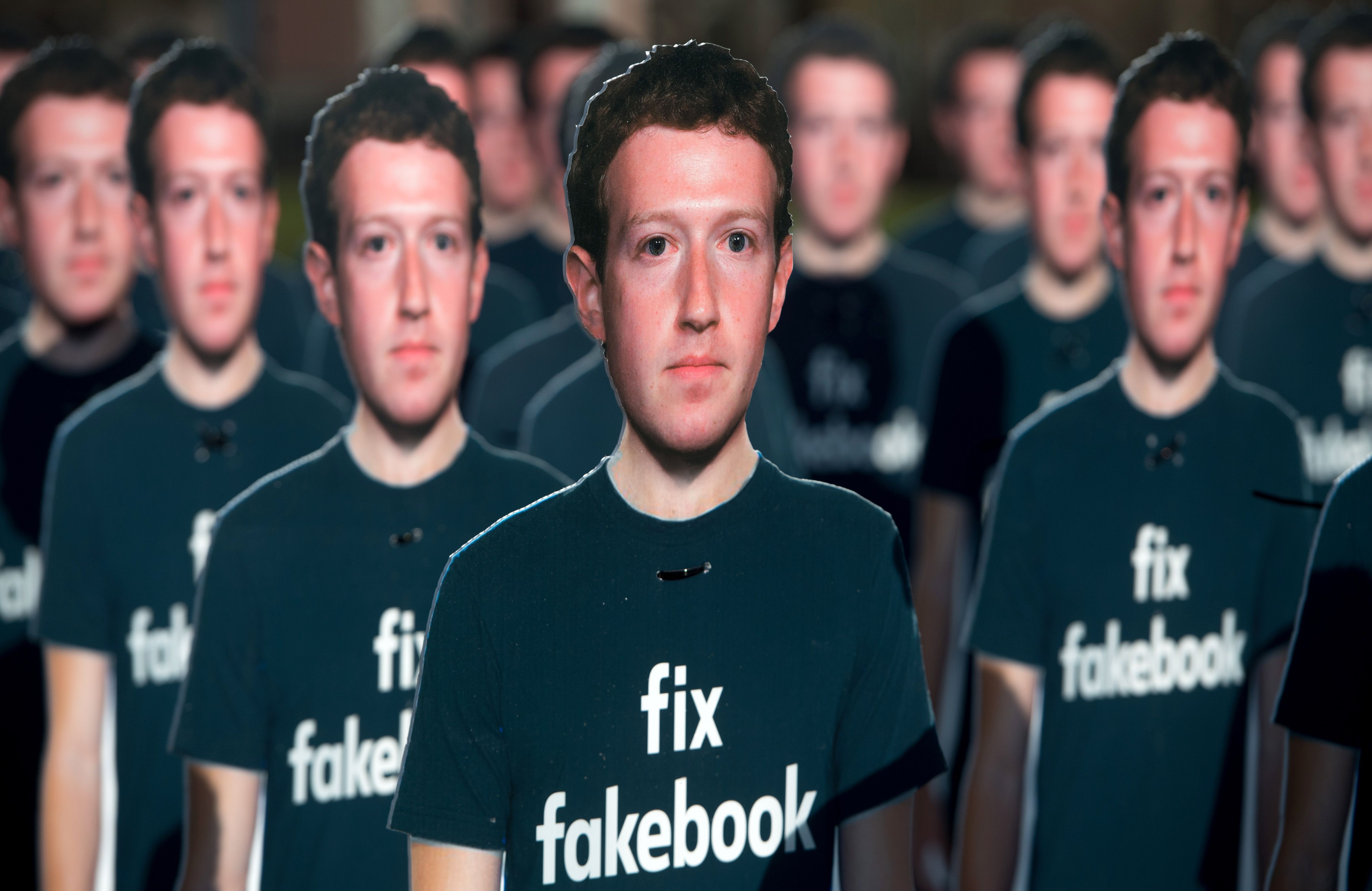

One hundred cardboard cutouts of Facebook founder and CEO Mark Zuckerberg stand outside the US Capitol in Washington, DC, April 10, 2018.

Advocacy group Avaaz is calling attention to what the group says are hundreds of millions of fake accounts still spreading disinformation on Facebook. (Photo: SAUL LOEB/AFP/Getty Images)

The common factor in social media scandals

The problems mentioned above weren’t caused by anybody breaking existing social network rules. Nor were they about hacking in the true sense of the word, which involves stealing data.

These scandals are better understood as political smear campaigns. If you’ve seen a good political smear campaign, you’ll know smears are funny, interesting and shareable. Just like a good meme.

US President Lyndon Johnson was an expert at smearing his opponents. As Hunter S. Thompson reported:

“The race was close and Johnson was getting worried. Finally he told his campaign manager to start a massive rumor campaign about his opponent’s life-long habit of enjoying carnal knowledge of his barnyard sows.

“Christ, we can’t get away with calling him a pig-f****r,” the campaign manager protested. “Nobody’s going to believe a thing like that.”

“I know,” Johnson replied. “But let’s make the sonofab****h deny it.”

The fundamentals of a good smear campaign are what underlie many of the abuses we see on Facebook today. Sometimes the pernicious story has a kernel of truth. Sometimes it’s entirely rubbish. But that misses the point.

For a smear to work, it just has to be shared. Facebook, YouTube and Twitter all reward content that’s shared.

More sharing reaches more people directly. That’s the nature of social media.

Therein lies the problem. A story that’s funny and shocking (i.e. shareable) is more likely to be recommended by YouTube, is cheaper to advertise and indexes better on Google.

Of course, this has been understood for years. You can even buy guides to it.

Ryan Holiday’s hilarious and disturbing book, Trust Me, I’m Lying, explains how he would graffiti his own client’s posters at night to create controversy and shareability. Holiday would then anonymously share photos of these defaced posters in Facebook groups, forums and Twitter to stoke up fights, encouraging both sides to get outraged.

Outrage meant lots of sharing on social networks, and it could even be a springboard into the national media.

Nothing Holiday did was illegal, or even broke social network rules. But it was clearly an abuse of social networks, and ultimately damaging to society, because it created controversy where none previously existed. What would you rather society concentrate on? Schools, jobs, hospitals, or the latest social media blowup?

Beyond simply stirring up controversy, there are also plenty of technical tricks for making a smear take off. Social networks are good at finding technical abuses like these, such as fake profiles, data scraping and traditional hacking.

But new problems are constantly being created, and to regulators, it appears the tech companies aren’t learning from their mistakes.

Image courtesy TechCrunch/Bryce Durbin

Why is this?

Technology companies simply have the wrong culture to fix this sort of problem. Technologists inevitably focus on technical abuses. Employing armies of fact-checkers is an important response to fake news, but ask any political strategist how to tackle a smear. They won’t say ‘counter with the truth’, because a rebuttal can embed the lie rather than stop it. Remember Johnson and the pigs.

This is a different sort of abuse. Almost every important abuse mentioned here involves somebody using the tools in a way that nobody at Facebook anticipated.

Facebook built an incredible engine that uses the data it holds about people to improve advertising targeting. But that same engine can also be used by companies more commonly associated with military-style psychological warfare against populations and armies.

Technology companies are always going to struggle with social problems. Engineers have a different type of devious mind from political strategists and online conmen.

Luckily, we already have a model for this.

White hat hackers have existed for decades. The internet giants reward smart ‘friendly’ hackers, paying them to find security holes and then help plug them.

Image courtesy TechCrunch/Bryce Durbin

Our proposal: ‘White hat’ Cambridge Analytica

All the social networks need to do is start paying bonuses to the world’s most devious political and social media strategists: people who would otherwise be using social networks for dodgy clients.

Pay them to find weaknesses in Facebook, just as tech companies pay bonuses for exposing technical flaws in software.

Everybody wins from this. Facebook would be able to fix problems before they are widely exploited. And as a bonus, it would be diverting some consultants from a life of (almost) crime. Facebook’s investors would be reassured by fewer scandals and less risk of costly regulation.

And the rest of us would benefit from Facebook starting to act its age.

Source: TechCrunch