[Editor’s note: this is a free example of a series of articles we’re publishing by top experts who have cutting-edge startup advice to offer, over on Extra Crunch. Get in touch at ec_columns@techcrunch.com if you have ideas to share.]

Even the best growth marketers fail to get content marketing to work. Many are unwittingly using tactics from four years ago that no longer work today.

This post cuts through the noise by sharing real-world data behind some of the biggest SEO successes this year.

It studies the content marketing performance of clients of Growth Machine and Bell Curve (my company), two marketing agencies that have helped grow Perfect Keto, Tovala, Framer, Crowd Cow, Imperfect Produce, and over a hundred others.

What content do their clients write about, how do they optimize that content to rank well (SEO), and how do they convert their readers into customers?

You’re about to see how most startups manage their blogs the wrong way.

Keep CupAndLeaf.com open as a reference as we go along. We’ll explore the tactics behind its 150,000 monthly visitors.

Write fewer, more in-depth articles

In the past, Google wasn’t skilled at identifying and promoting high quality articles. Their algorithms were tricked by low-value, “content farm” posts.

That is no longer the case.

Today, Google is getting close to delivering on its original mission statement: “To organize the world’s information and make it universally accessible and useful.” In other words, they now reliably identify high quality articles. How? By monitoring engagement signals: Google can detect when a visitor hits the Back button in their browser. This signals that the reader quickly bounced from the article after they clicked to read it.

If this occurs frequently for an article, Google ranks that article lower. It deems it low quality.

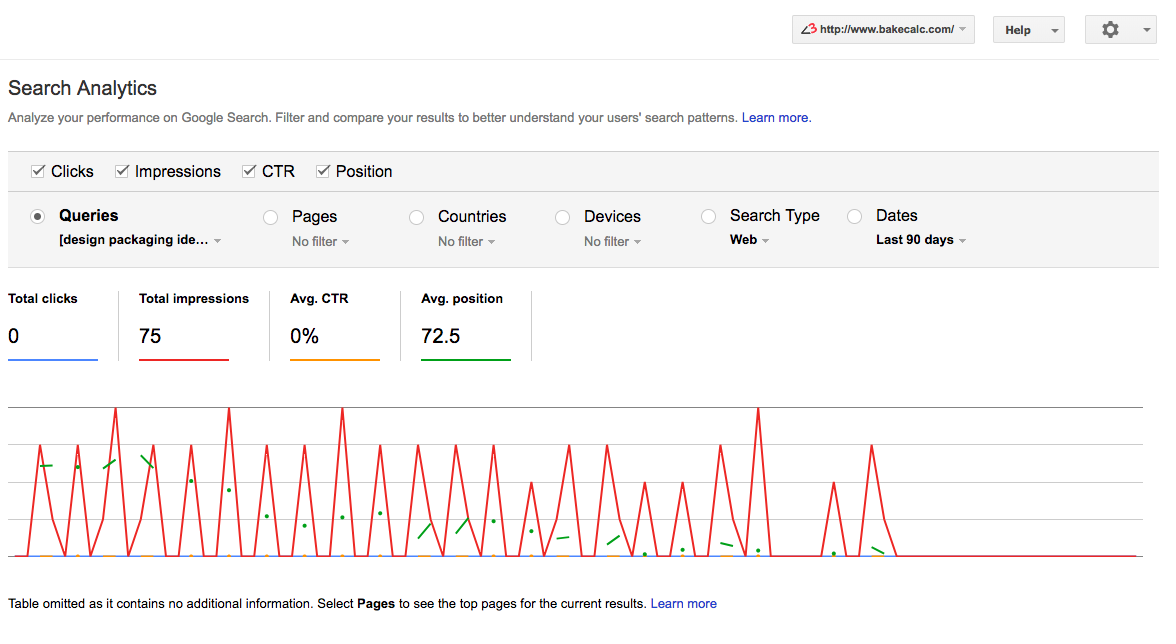

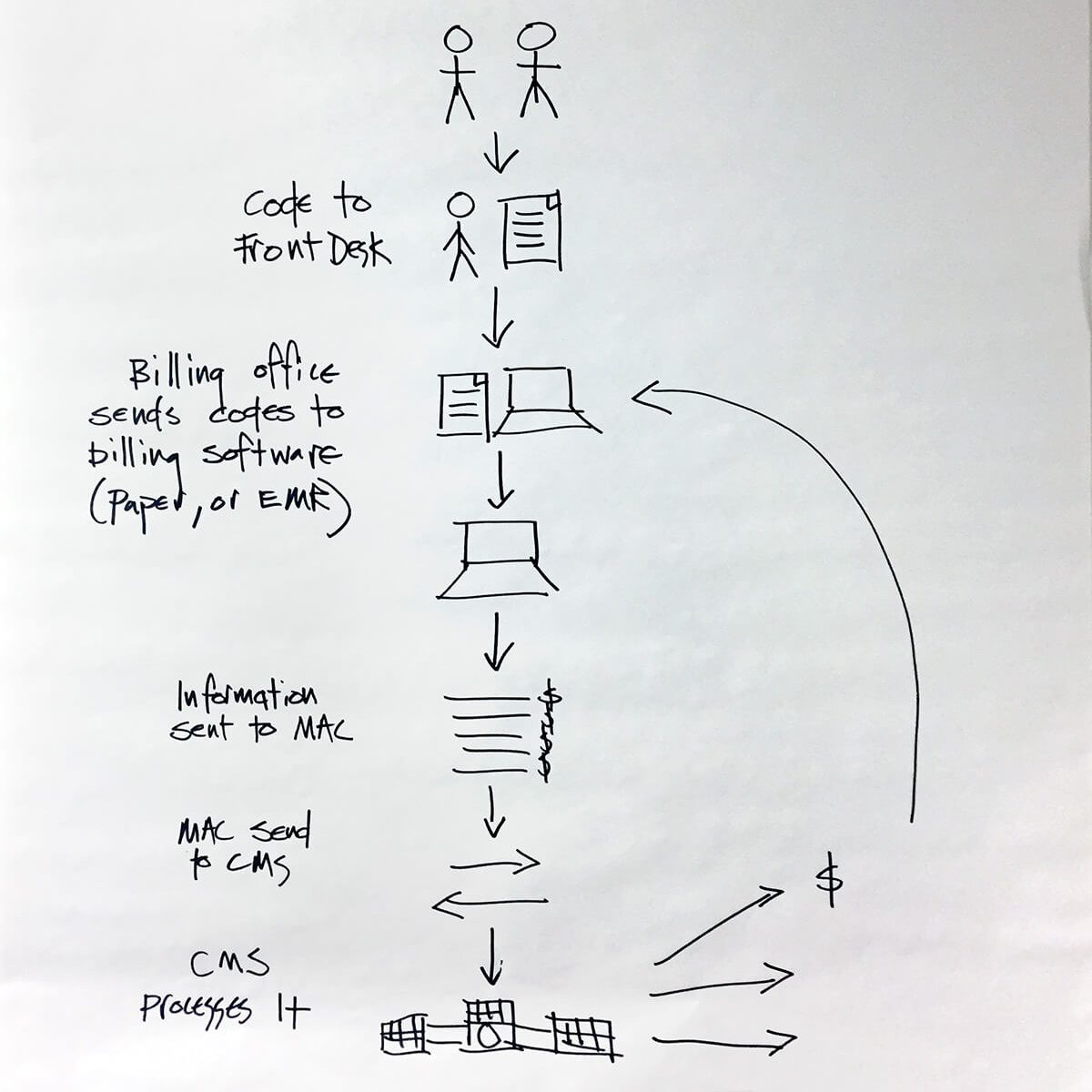

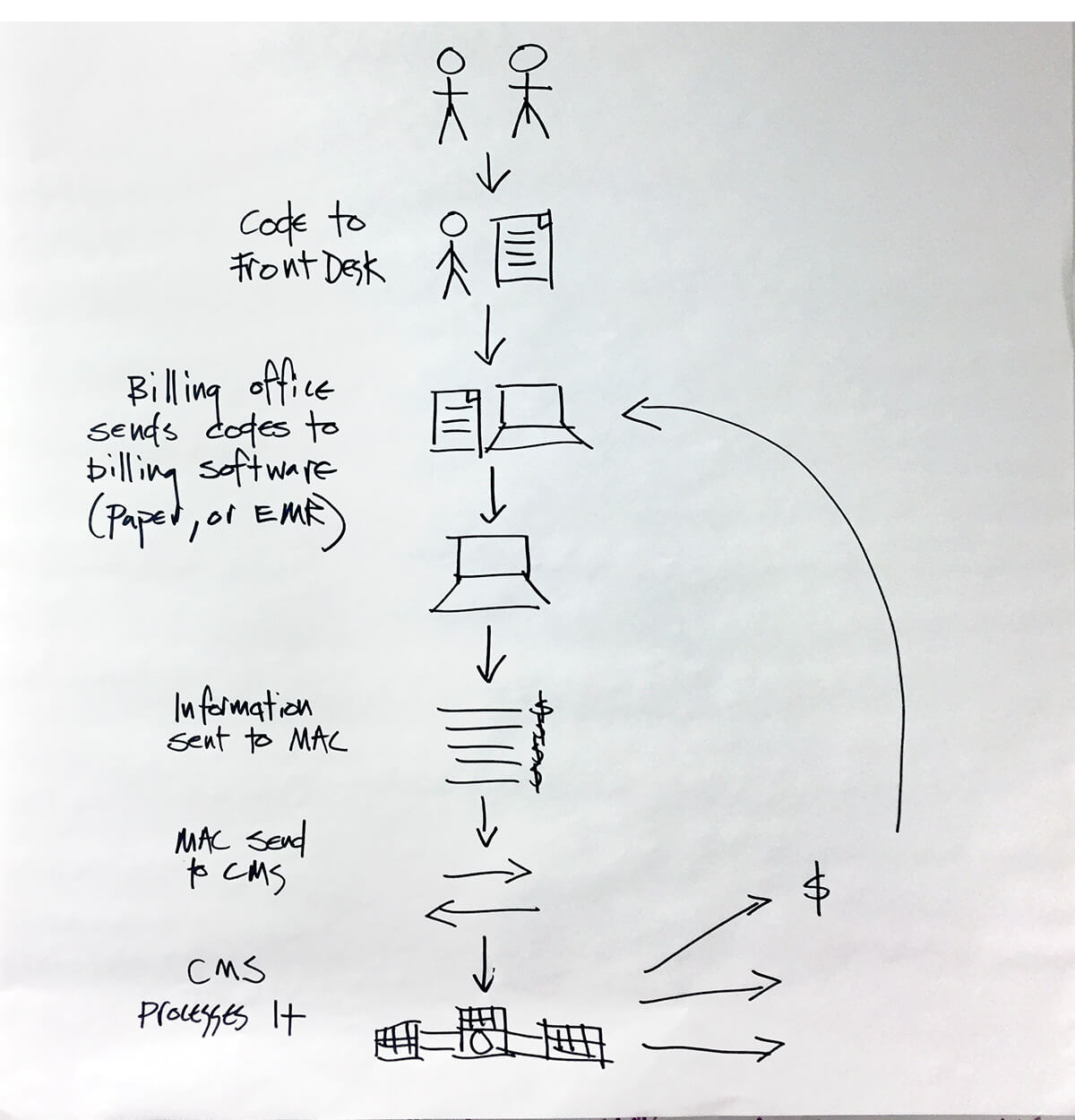

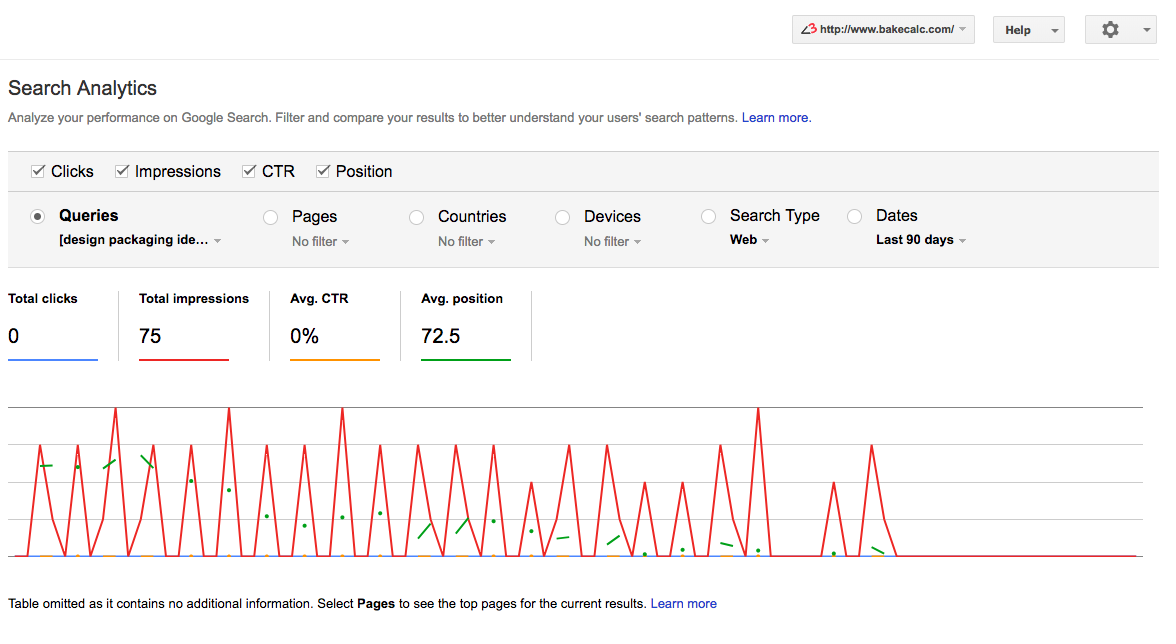

For example, below is a screenshot of the (old) Google Webmaster Tools interface. It visualizes this quality assessment process: It shows a blog post with the potential to rank for the keyword “design packaging ideas.” Google initially ranked it at position 25.

However, since readers weren’t engaging with the content as time went on, Google incrementally ranked the article lower — until it completely fell off the results page:

The lesson? Your objective is to write high quality articles that keep readers engaged. Almost everything else is noise.

In studying our clients, we’ve identified four rules for writing engaging posts.

1. Write articles for queries that actually prioritize articles.

Not all search queries are best served by articles.

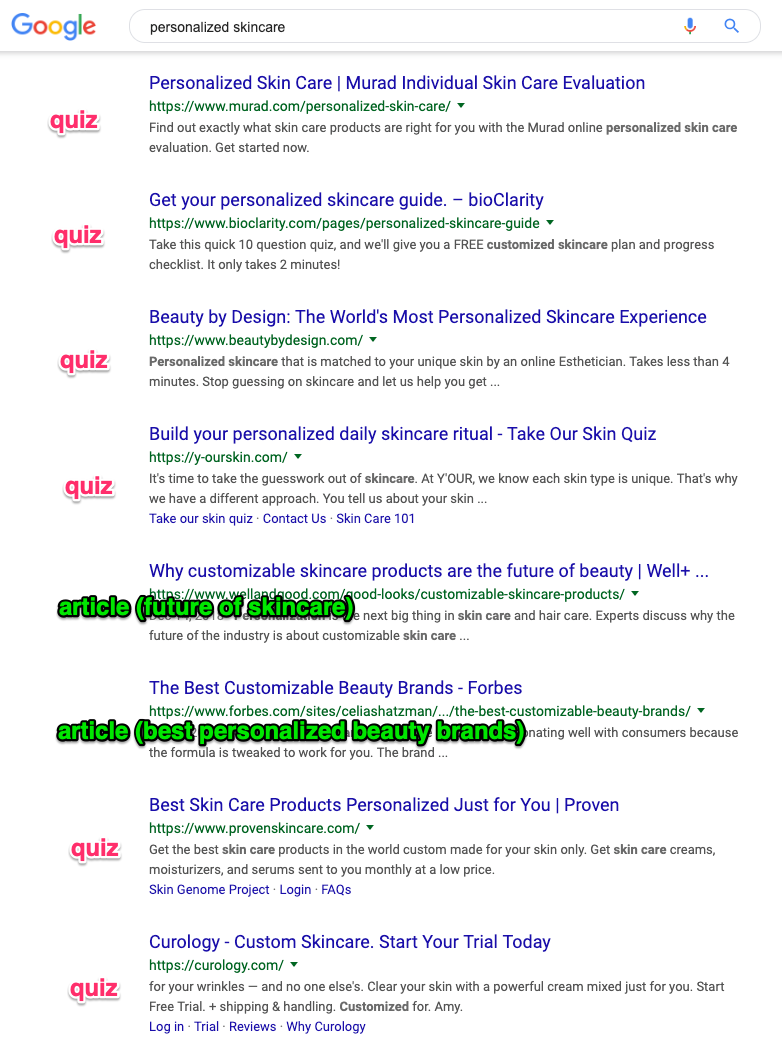

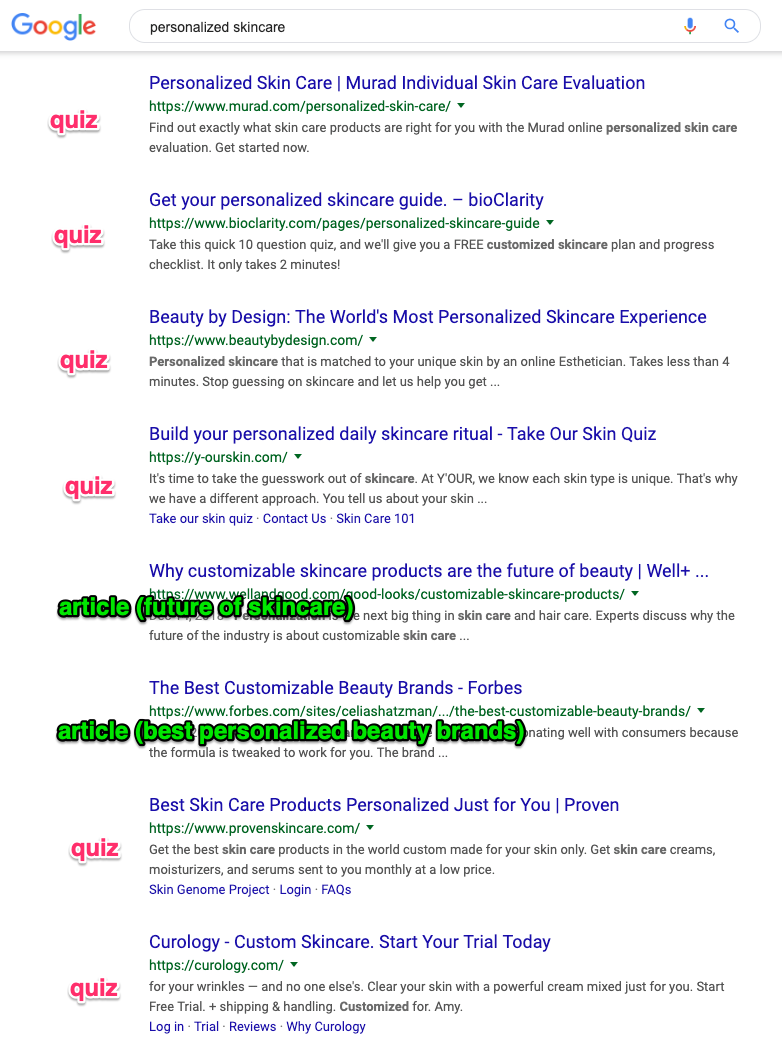

Below, examine the results for “personalized skincare”:

Notice that Google is prioritizing quizzes. Not articles.

So if you don’t perform a check like this before writing an article on “personalized skincare,” there’s a good chance you’re wasting your time. Because, for some queries, Google has begun prioritizing local recommendations, videos, quizzes, or other types of results that aren’t articles.

Sanity check this before you sit down to write.

2. Write titles that accurately depict what readers get from the content.

Are incoming readers looking to buy a product? Then be sure to show them product links.

Or, were they looking for a recipe? Provide that.

Make your content deliver on what your titles imply a reader will see. Otherwise, readers bounce. Google will then notice the accumulating bounces, and you’ll be penalized.

3. Write articles that conclude the searcher’s experience.

Your objective is to be the last site a visitor visits in their search journey.

Meaning, if they read your post and then don’t look at any other Google results, Google infers that your post gave the searcher what they were looking for. And that’s Google’s prime directive: get searchers to their destination through the shortest path possible.

The two-part trick for concluding the searcher’s journey is to:

Go sufficiently in-depth to cover all the subtopics they could be looking for.

Link to related posts that may cover the tangential topics they seek.

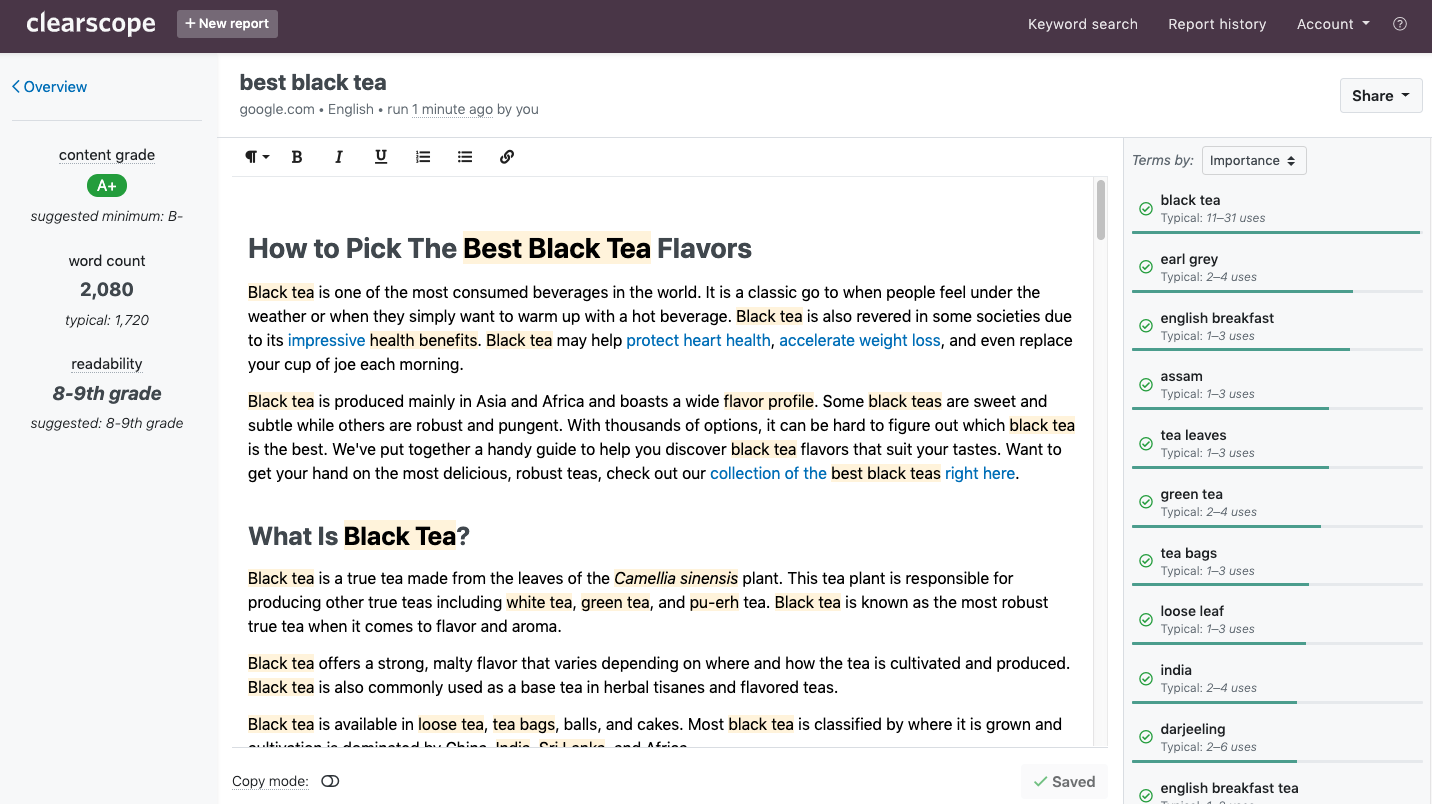

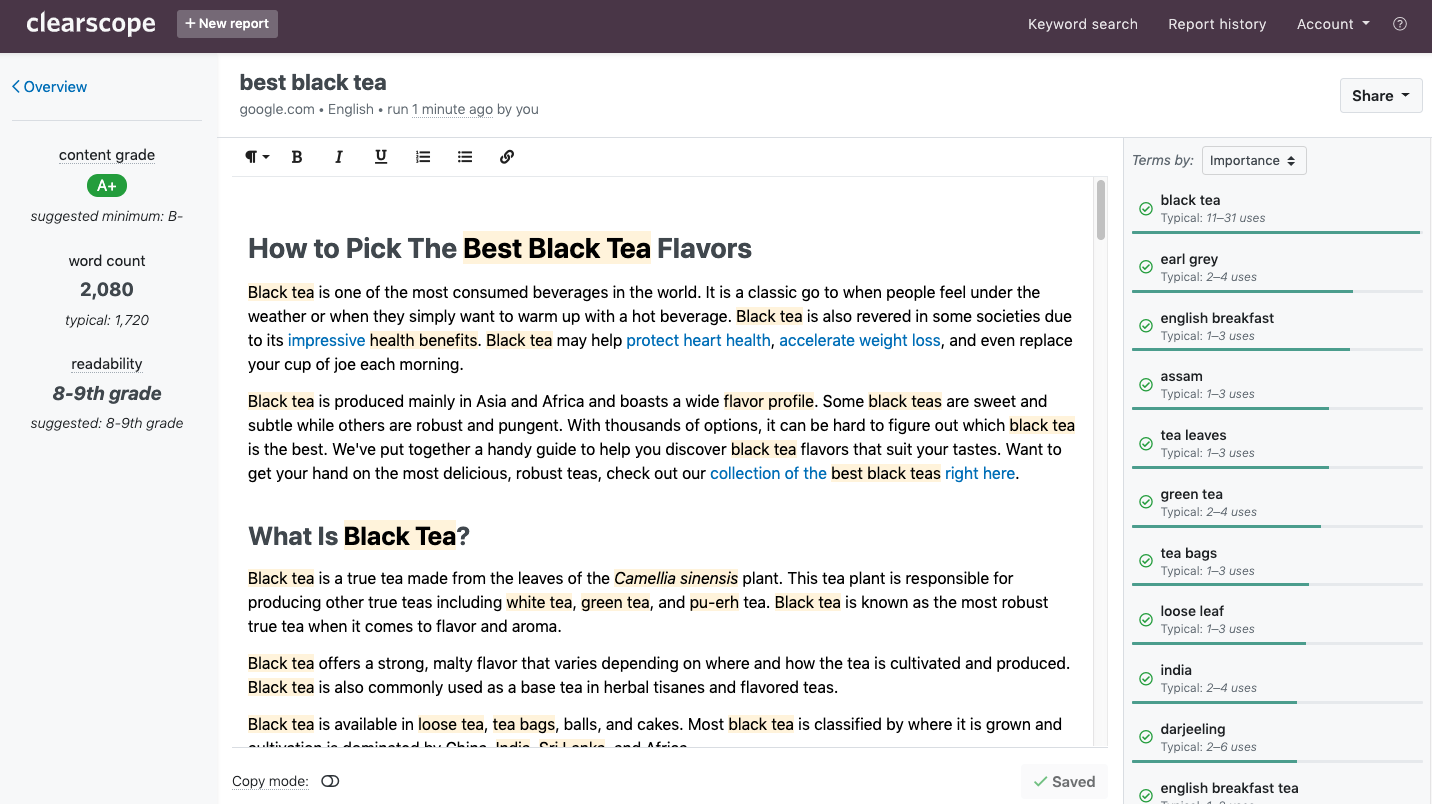

This is what we use Clearscope for — it ensures we don’t miss critical subtopics that help our posts rank:

4. Write in-depth yet concise content.

In 2019, what do most of the top-ranked blogs have in common?

They skip filler introductions, keep their paragraphs short, and get to the point.

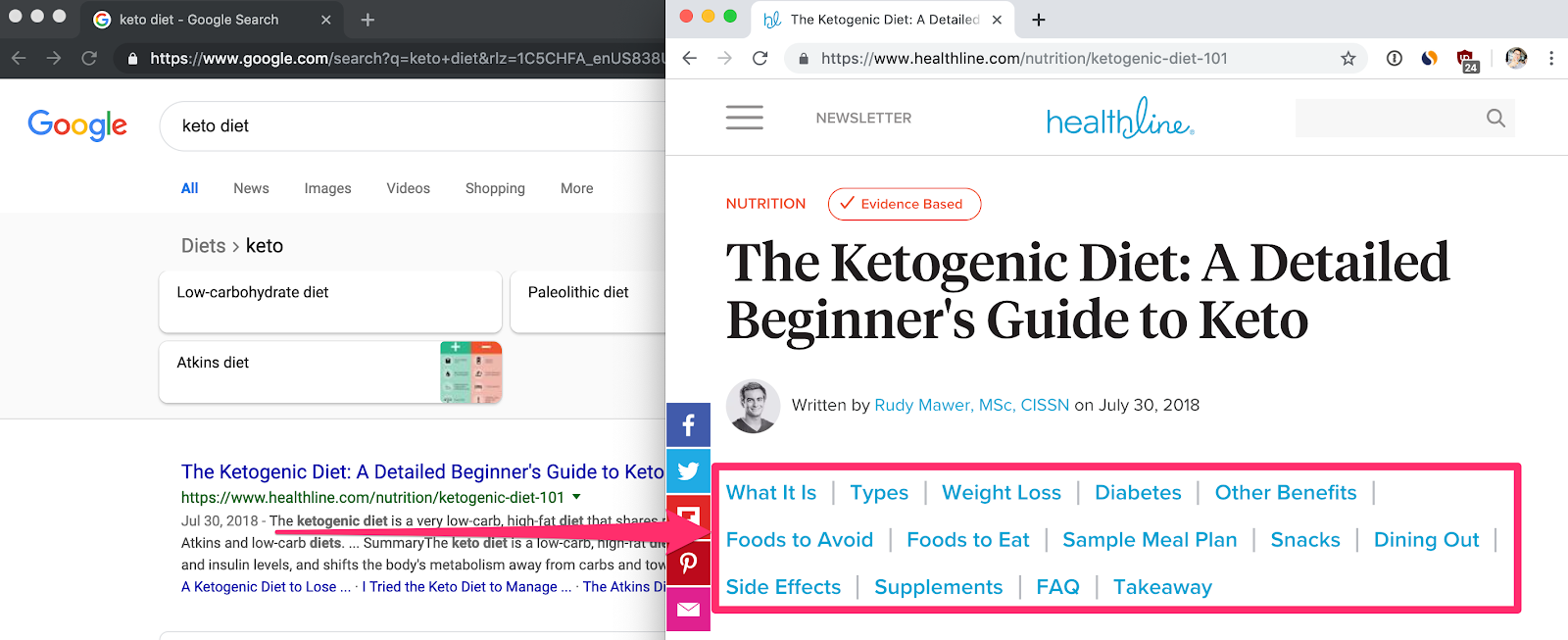

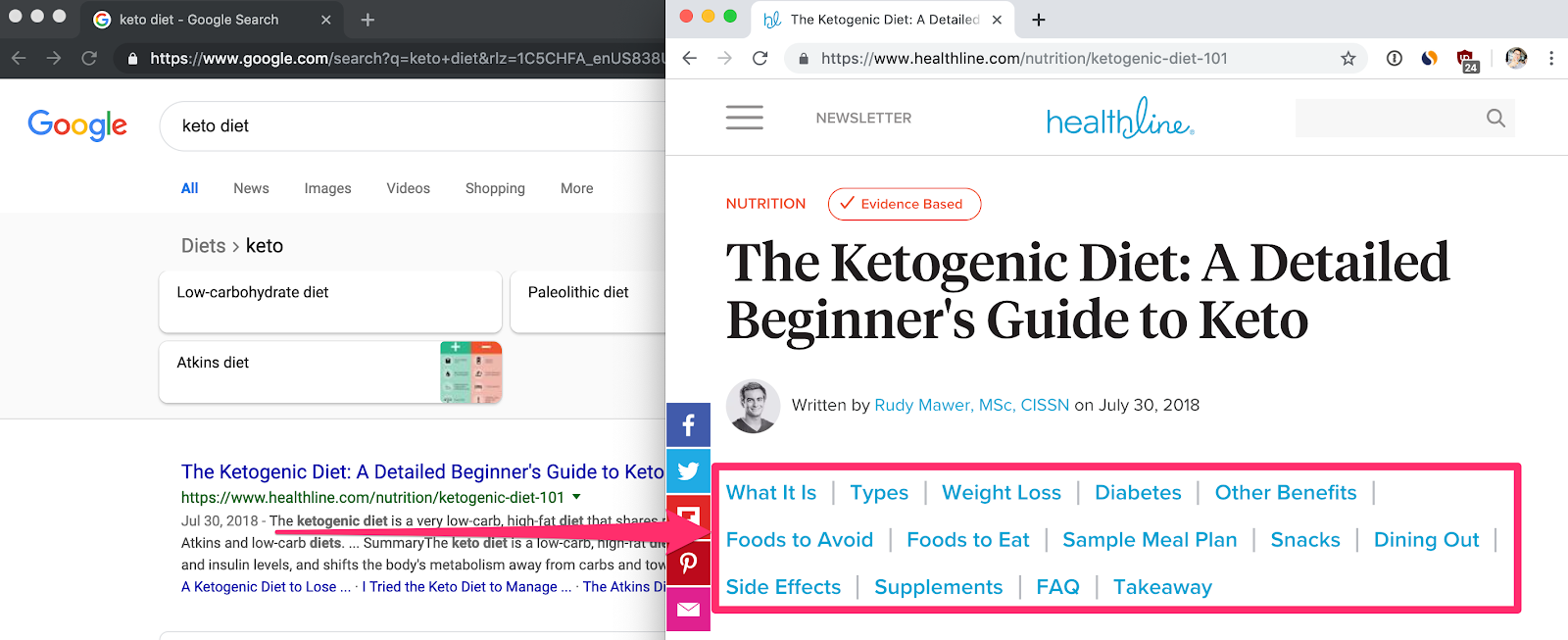

And, to make navigation seamless, they employ a “table of contents” experience:

Be like them, and get out of the reader’s way. All our best-performing blogs do this.

Check out more articles by Julian Shapiro over on Extra Crunch, including “What’s the cost of buying users from Facebook and 13 other ad networks?” and “Which types of startups are most often profitable?”

Prioritize engagement over backlinks

In going through our data, the second major learning was about “backlinks,” which is marketing jargon for links to your site from someone else’s.

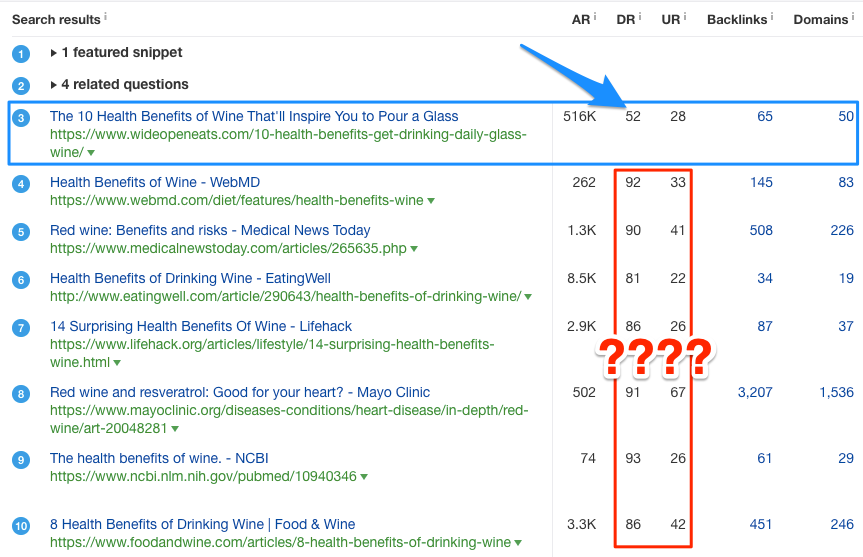

Four years ago, the SEO community was focused on backlinks and Domain Ranking (DR) — an indication of how many quality sites link to yours (scored from 0 to 100). At the time, they were right to be concerned about backlinks.

Today, our data reveals that backlinks don’t matter as much as they used to. They certainly help, but you need great content behind them.

Most content marketers haven’t caught up to this.

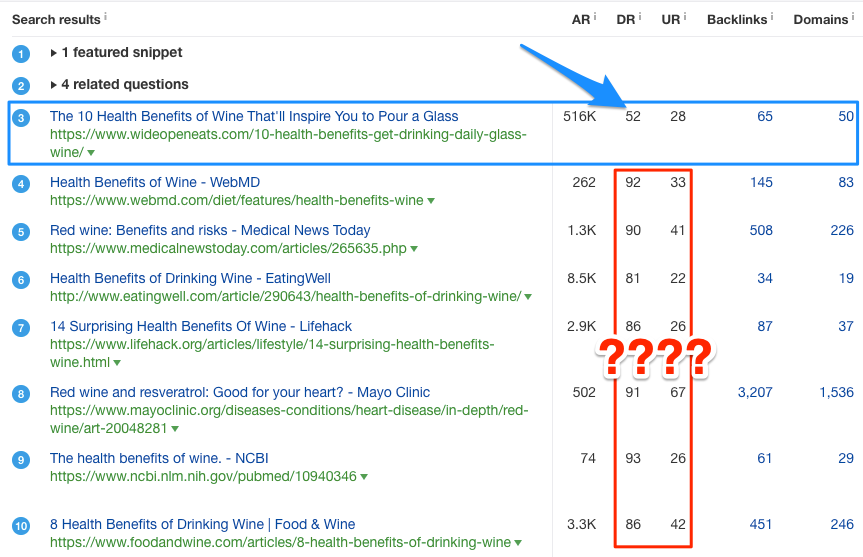

Here’s a screenshot showing how small publishers can beat out large behemoths today — with very little Domain Ranking:

The implication is that, even without backlinks, Google is still happy to rank you highly. Consider this: They don’t need your site to be linked from TechCrunch for their algorithm to determine whether visitors are engaged on your site.

Remember: Google has Google Analytics, Google Search, Google Ads, and Google Chrome data to monitor how searchers engage with your site. Believe me, if they want to find out whether your content is engaging, they can find a way. They don’t need backlinks to tell them.

This is not to say that backlinks are useless.

Our data shows they still provide value, just much less. Notably, they get your pages “considered” by Google sooner: If you have backlinks from authoritative and relevant sites, Google will have the confidence to send test traffic to your pages in perhaps a few weeks instead of in a few months.

Here’s what I mean by “test traffic”: In the weeks after you publish a post, Google notices it and experimentally surfaces it at the top of the results for related search terms. Google then monitors whether searchers engage with the content (i.e., don’t quickly hit their Back button). If the content is engaging, Google will increasingly surface your articles and raise your rankings over time.

Having good backlinks can cut this process down from months to a few weeks.

Prioritize conversion over volume

Engagement isn’t your end goal. It’s the precursor to what ultimately matters: getting a signup, subscription, or purchase. (Marketers call this your “conversion event.”) Visitors can take a few paths to your conversion event:

Short: They read the initial post then immediately convert.

Medium: They read the initial post plus a few more before eventually converting.

Long (most common): They subscribe to your newsletter and/or return later.

To increase the rate at which readers take the short and medium paths, optimize your blog posts’ copy, design, and calls to action. We’ve identified two rules for doing this.

1. Naturally segue to your pitch

Our data shows you should not pitch your product until the back half of your post.

Why? Pitching yourself in the intro can taint the authenticity of your article.

Also, the further a reader gets into a good article, the more familiarity and trust they’ll accrue for your brand, which means they’re less likely to ignore your pitch once they encounter it.

2. Don’t make your pitch look like an ad

Most blogs make their product pitches look like big, show-stopping banner ads.

Our data shows this visual fanfare is reflexively ignored by readers.

Instead, plug your product using a normal text link — styled no differently than any other link in your post. Woodpath, a health blog with Amazon products to pitch, does this well.

Think in funnels, not in pageviews

Finally, our best-performing clients focus less on their Google Analytics data and more on their readers’ full journeys:

They encourage readers to provide their email so they can follow up with a series of “drip” emails. Ideally, these build trust in the brand and get visitors to eventually convert.

They “retarget” readers with ads. This entails pitching them with ads for the products that are most relevant to the topics they read on the blog. (Facebook and Instagram provide the granular control necessary to segment traffic like this.) You can read my growth marketing handbook to learn more about running retargeting ads well.

Here’s why retargeting is high-leverage: In running Facebook and Instagram ads for over a hundred startups, we’ve found that the cost of a purchase from retargeting ads is one-third the cost of a purchase from ads shown to people who haven’t yet visited our site.

Our data shows that clients who earn nothing from their blog traffic can sometimes earn thousands by simply retargeting ads to their readers.
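To make the one-third figure concrete, here is a rough sketch of the arithmetic in Python. The $60 cost per purchase for first-time ("cold") visitors and the $1,200 budget are made-up numbers for illustration, not figures from our client data, and the function names are hypothetical.

```python
def retargeting_cost_per_purchase(cold_cost_per_purchase: float) -> float:
    """Apply the article's rule of thumb: a retargeting purchase costs
    about one-third of a purchase from people new to the site."""
    return cold_cost_per_purchase / 3


def purchases_for_budget(budget: float, cost_per_purchase: float) -> int:
    """Whole number of purchases a given ad budget buys at a given cost."""
    return int(budget // cost_per_purchase)


# Hypothetical inputs, chosen only to illustrate the ratio.
cold_cost = 60.0       # assumed cost per purchase from cold audiences
budget = 1200.0        # assumed monthly ad budget

retargeting_cost = retargeting_cost_per_purchase(cold_cost)

print(purchases_for_budget(budget, cold_cost))         # 20
print(purchases_for_budget(budget, retargeting_cost))  # 60
```

Under these assumptions, the same budget buys three times as many purchases when it is spent on readers who have already visited the blog.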

Recap

It’s possible for a blog with 50,000 monthly visitors to earn nothing.

So, prioritize visitor engagement over volume: Make your hero metrics your revenue per visitor and your total revenue. That’ll keep your eye on the intermediary goals that matter:

Attracting visitors with an intent to convert

Keeping those visitors engaged on the site

Then compelling them to convert

In short, your goal is to help Google do its job: Get readers where they need to go with the least amount of friction in their way.
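As a minimal sketch of the two hero metrics from the recap, the Python below computes revenue per visitor and conversion rate for two blogs. The `BlogMonth` class and every number in it are hypothetical, chosen to mirror the point that a 50,000-visitor blog can earn nothing while a smaller, higher-intent blog earns real revenue.

```python
from dataclasses import dataclass


@dataclass
class BlogMonth:
    """One month of blog performance (all figures are hypothetical)."""
    visitors: int      # monthly visitors
    conversions: int   # signups, subscriptions, or purchases
    revenue: float     # total revenue attributed to the blog, in dollars

    @property
    def conversion_rate(self) -> float:
        # Share of visitors who reached the conversion event.
        return self.conversions / self.visitors if self.visitors else 0.0

    @property
    def revenue_per_visitor(self) -> float:
        # Hero metric: dollars earned per visitor.
        return self.revenue / self.visitors if self.visitors else 0.0


# A high-volume blog that never converts vs. a small, high-intent blog.
high_volume = BlogMonth(visitors=50_000, conversions=0, revenue=0.0)
high_intent = BlogMonth(visitors=5_000, conversions=100, revenue=4_000.0)

for name, blog in [("high volume", high_volume), ("high intent", high_intent)]:
    print(f"{name}: ${blog.revenue_per_visitor:.2f} per visitor, "
          f"{blog.conversion_rate:.1%} conversion, ${blog.revenue:,.0f} total")
```

Tracking these two numbers month over month, rather than pageviews alone, is one way to keep attention on intent, engagement, and conversion.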


Source: Tech Crunch